As highlighted earlier, replication protects against individual server failures, and redundant networking protects against cable and network card failures. Neither protects against rack maintenance or network switch failures. A rack is simply an enclosure that holds multiple servers.

Also, replication is only guaranteed to tolerate the loss of up to n-1 nodes in an m-node cluster, where n is the replication factor and m > n.

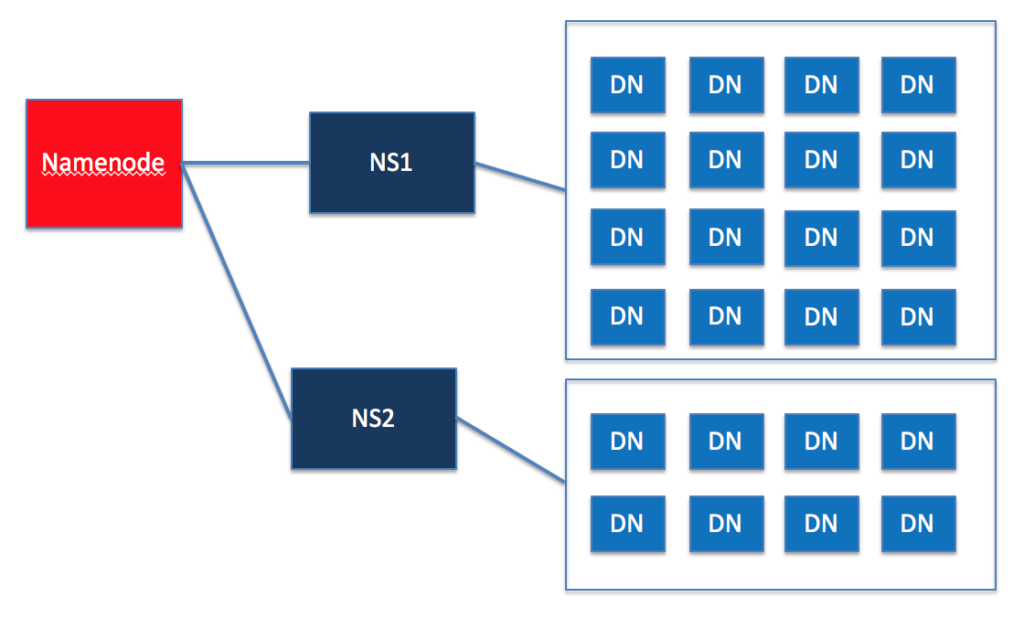

In the cases mentioned above (a rack maintenance window or outage, or n or more nodes being down at once), the entire cluster might have to be taken down. One way to address this is to place nodes in multiple racks and ensure that at least one copy of the data is present in each rack.
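To make the difference concrete, here is a minimal Python sketch of why spreading replicas across racks matters. The node names (`node-a`, `node-b`, `node-c`), the rack ids, and the helper functions are hypothetical and only illustrate the idea. They are not part of HDFS.

```python
def nodes_in_rack(rack, node_to_rack):
    """Return the set of nodes assigned to the given rack."""
    return {node for node, node_rack in node_to_rack.items() if node_rack == rack}


def block_available(replica_nodes, failed_nodes):
    """A block stays readable while at least one replica is on a live node."""
    return any(node not in failed_nodes for node in replica_nodes)


# Hypothetical 3-node cluster spread over 2 racks.
node_to_rack = {"node-a": "/rack1", "node-b": "/rack1", "node-c": "/rack2"}

# Two ways to place 2 replicas of the same block.
same_rack_replicas = ["node-a", "node-b"]   # both copies in /rack1
cross_rack_replicas = ["node-a", "node-c"]  # one copy in each rack

# Simulate an outage of the whole of /rack1.
rack1_down = nodes_in_rack("/rack1", node_to_rack)

print(block_available(same_rack_replicas, rack1_down))   # False
print(block_available(cross_rack_replicas, rack1_down))  # True
```

With both replicas in the same rack, a single rack outage makes the block unreadable even though the replication factor has not been exceeded. With one replica per rack, the block survives.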

To ensure that blocks are copied to nodes in more than one rack, we need to make the cluster rack aware by assigning each host to a rack group. This is done with a script and a file that maps each node to the logical group id of its rack.

If the cluster is set up in 2 rack groups, we divide the nodes in a 2:1 ratio (one rack group should have twice as many nodes as the other).

We can assign racks to the nodes in the cluster using either Cloudera Manager or a custom script.

- When the cluster is managed by a distribution like Cloudera, it is recommended to use Cloudera Manager.

- In either approach, we will have a Rack Topology Script and a file with the mapping between each node and its logical rack group id.

- We should not assign rack group ids randomly. All the servers in a rack should point to the same rack group id.

- As we have only 3 nodes, let us assume that bigdataserver-5 and bigdataserver-6 are in one rack group and the other node is in the other rack group.
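The assignment described above can be sketched in Python as a small helper that renders the content of a mapping file. The one-pair-per-line `host rack` layout, the `render_mapping` helper, and the `rack_of` dictionary are assumptions made for illustration. The actual layout must match whatever your topology script parses. Hadoop may also pass IP addresses rather than host names to the script, so a real mapping file may need entries for those as well.

```python
from collections import Counter

# Assignment used in this walkthrough: 2 nodes in one rack group, 1 in the other.
rack_of = {
    "bigdataserver-5": "/rack1",
    "bigdataserver-6": "/rack1",
    "bigdataserver-7": "/rack2",
}


def render_mapping(rack_of):
    """Return mapping file text with one 'host rack' pair per line (assumed layout)."""
    for host, rack in rack_of.items():
        # Rack ids are written as paths, so reject anything without a leading slash.
        if not rack.startswith("/"):
            raise ValueError(f"rack id for {host} must start with '/': {rack!r}")
    return "".join(f"{host} {rack}\n" for host, rack in sorted(rack_of.items()))


print(render_mapping(rack_of), end="")

# Count nodes per rack group to confirm the 2:1 split.
print(dict(Counter(rack_of.values())))
```

Keeping the assignment in one dictionary makes it easy to check that every server in the same physical rack points to the same rack group id before the file is deployed.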

Using Cloudera Manager

- Go to HDFS and review HDFS Components

- Click on Hosts and go to Hosts

- All the Hosts will be shown

- Choose bigdataserver-5 and bigdataserver-6

- Go to Actions -> Assign rack and type /rack1. For production clusters, you need to enter the actual rack details based on the hardware in your enterprise.

- Repeat the step for bigdataserver-7 and assign it to /rack2.

- Deploy and Restart the service.

Using Script

If we plan to use an external script file, we can write a shell or Python script and provide its path in the HDFS configuration for the parameter “net.topology.script.file.name”.

- Create rack-topology.sh and rack-topology.data under /etc/hadoop/conf

- Define ‘net.topology.script.file.name’ in core-site.xml with value /etc/hadoop/conf/rack-topology.sh

- The mapping file must be in the location referred to by the script. The script below reads rack-topology.data from the current directory, so the file with the mapping information must also be under /etc/hadoop/conf.

- Restart HDFS using commands

Refer to the sample scripts below, which need to be copied to the Hadoop configuration directory (/etc/hadoop/conf/).

https://gist.github.com/dgadiraju/717d674bd7a72e2ca538407f1486652a
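For readers who prefer Python over shell, the following is a minimal sketch of what a topology script could look like. It is not the script from the gist above. It assumes a mapping file named rack-topology.data with one whitespace-separated `host rack` pair per line, and, unlike a script that reads from the current directory, it looks for that file next to the script itself. Hadoop invokes the topology script with one or more host names or IP addresses as arguments and reads one rack id per argument from standard output. Unknown hosts fall back to `/default-rack` here. The helper names `load_mapping`, `resolve`, and `default_data_file` are hypothetical.

```python
#!/usr/bin/env python3
import os
import sys

DEFAULT_RACK = "/default-rack"  # fallback rack id for hosts missing from the mapping file


def default_data_file():
    """Assumed location: rack-topology.data in the same directory as this script."""
    script_dir = os.path.dirname(os.path.abspath(sys.argv[0]))
    return os.path.join(script_dir, "rack-topology.data")


def load_mapping(path):
    """Read 'host rack' pairs, ignoring blank lines and '#' comments."""
    mapping = {}
    try:
        with open(path) as handle:
            for line in handle:
                fields = line.split("#", 1)[0].split()
                if len(fields) >= 2:
                    mapping[fields[0]] = fields[1]
    except OSError:
        # A missing or unreadable file sends every host to the default rack.
        pass
    return mapping


def resolve(hosts, mapping):
    """Return one rack id per host, in the same order as the input."""
    return [mapping.get(host, DEFAULT_RACK) for host in hosts]


if __name__ == "__main__":
    # Print one rack id per line, one for each argument passed by Hadoop.
    for rack in resolve(sys.argv[1:], load_mapping(default_data_file())):
        print(rack)
```

The script must be executable by the user that runs the NameNode. After restarting HDFS, running `hdfs dfsadmin -printTopology` is one way to check which rack each DataNode was assigned to.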

By this time you should have set up Cloudera Manager, installed the Cloudera Distribution of Hadoop, and configured services such as Zookeeper and HDFS. You should also have understood some of the important concepts (if not all). If there are any issues, please reach out to us.

Make sure to stop the services in Cloudera Manager and also shut down the servers provisioned from GCP or AWS to conserve credits and control costs.